Running a Linux VM in Qemu

2015-03-05 14:05:14

Having a running Linux VM at one's disposal is a must for any web developer.

I was looking for a free and simple way to do so.

QEMU: QEMU is free and open-source virtualization software that can run a disk image on any host OS.

A Windows x86-64 build can be downloaded here: http://qemu.weilnetz.de/w64/

Debian i386: my preferred Linux distro for any server installation.

The Debian website now encourages using "jigdo" rather than downloading an ISO directly. Jigdo is a tool that automatically downloads the Debian packages and builds a bootable ISO from them. I must say the process was flawless even on Windows, and it conveniently ensures that the packages on the image are the latest available versions.

Jigdo is available here: http://atterer.org/jigdo/

I pointed jigdo at the first Debian CD: http://cdimage.debian.org/debian-cd/7.8.0/i386/jigdo-cd/debian-7.8.0-i386-CD-1.jigdo

After 15 minutes, I had my ready-to-install Debian CD.

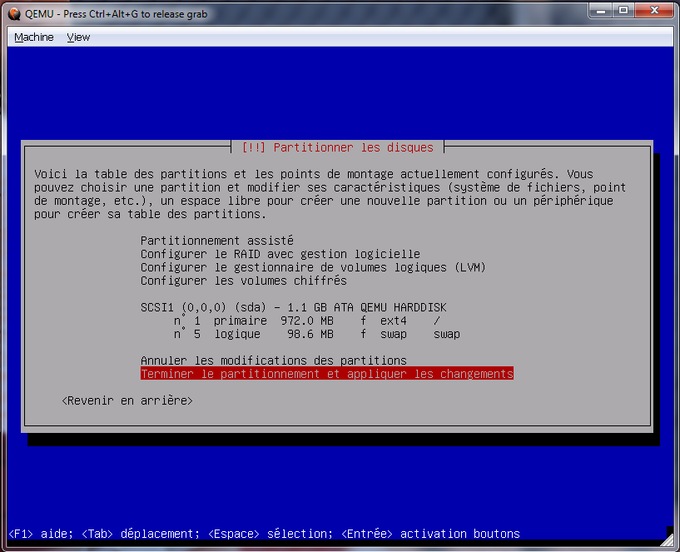

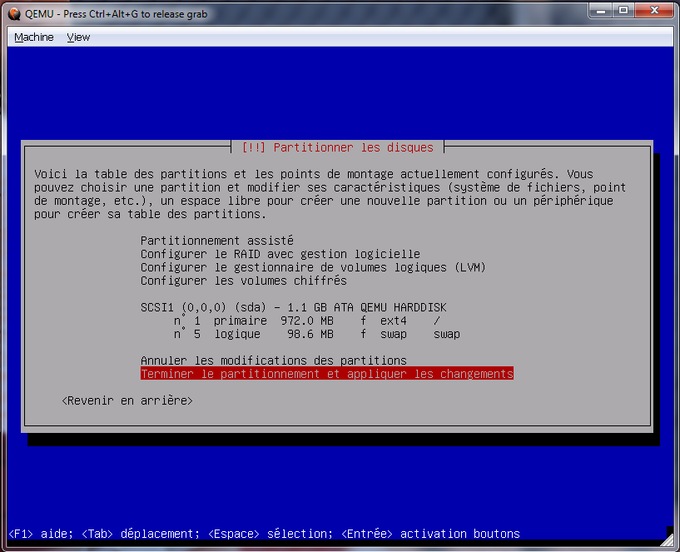

The next step is to create the disk image on which to install Debian:

    qemu-img create debian.img 1000M

1GB is enough for a headless Linux server.

Then boot the Debian CD with debian.img as the hard drive (recent QEMU builds name the binary qemu-system-i386 rather than plain qemu):

    qemu-system-i386 -cdrom debian-7.8.0-i386-CD-1.iso -hda debian.img -boot d

QEMU launches and we're ready to install the distro on the newly created disk image.

Grass simulation and infinite terrain using DX11 in C#

2015-03-05 08:36:04

Not really new stuff, as I coded this 3 years ago, but I made a nice video about it. I love how the background sounds add realism to the scene!

Implementation details here :

http://www.nicolasmy.com/projects/64-Grass-rendering-in-C#-Direct3D-11-with-SlimDX

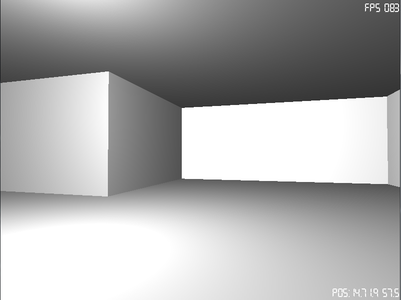

HDR-style bloom effect

2015-03-04 16:28:11

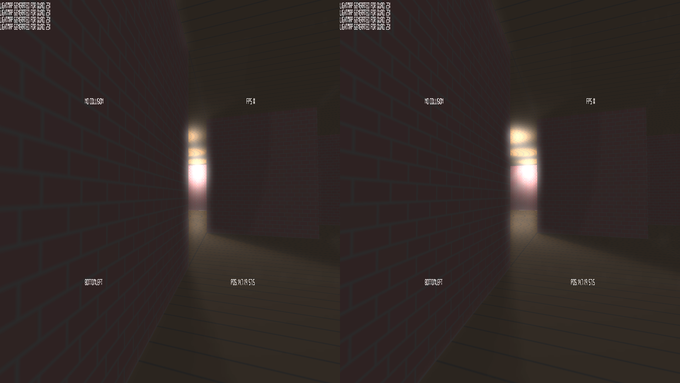

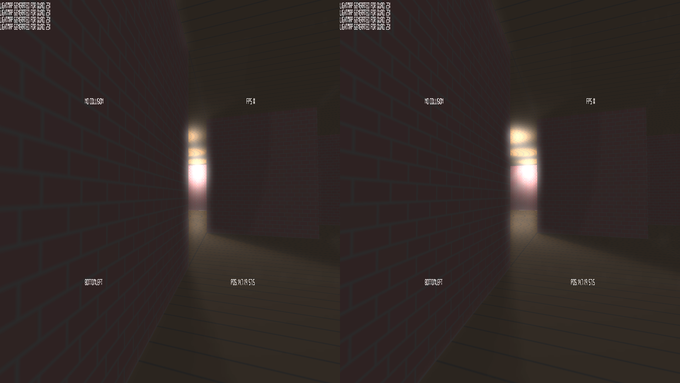

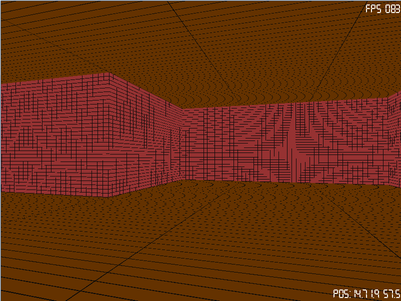

One can add some realism to 3D rendering by mimicking the light saturation and glare that the human eye experiences under high-contrast lighting, as seen in many modern games.

1. Render the scene with the lightmap only to a low-resolution target (1/8th of the screen resolution).

2. Blur the rendered scene using a Gaussian blur shader.

3. Stretch the blurred image to the current resolution and blend it with the current scene.

Here is the result in the current stereo vision rendering:

Analog gamepad support in FPS

2015-02-03 23:48:28

Having gamepad support in an Oculus Rift demo is a great addition, as using a keyboard can be somewhat clumsy while wearing VR goggles.

As for other inputs, we start by polling joystick input events, but this time we have to keep track of the latest stick value for each axis we are working with.

Thus on each frame, we can use the latest stick values to update the camera accordingly.

Axes 0 and 1 are for directional movement: the stored axis values are used directly to generate forward, backward, left and right motion vectors.

Axes 2 and 3 are used in the same way that mouse motion is used to compute heading and orientation changes. The difference is that we don't use a delta but the latest stored values to update the camera on each frame: a stick is held in position for the whole duration of the camera change, whereas for the mouse only the delta between two polled events expresses the camera change.

A deadzone constant has to be configured, as stick values don't always return to zero when an analog stick is released.

With the SDL library, an axis value is an integer between -32768 and 32767; a deadzone value of 500 is a good fit for an Xbox 360 gamepad.
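As a rough sketch of the scheme described above, the snippet below stores the latest value of each axis and applies it to a camera once per frame. It does not call SDL itself; `StickState`, `Camera`, the speed constants, the sign of the forward axis and the heading convention (yaw 0 looks along +z) are all assumptions made for the example. Only the axis range and the deadzone of 500 come from the text.

```cpp
#include <cmath>
#include <cstdint>
#include <cstdlib>

constexpr int kDeadzone = 500;          // deadzone suggested in the text
constexpr float kAxisMax = 32767.0f;    // largest positive raw axis value

// Latest raw value per axis, overwritten whenever an axis event is polled.
struct StickState {
    std::int16_t axis[4] = {0, 0, 0, 0};
};

// Hypothetical camera: position on the ground plane plus two angles in radians.
struct Camera {
    float x = 0.0f;
    float z = 0.0f;
    float yaw = 0.0f;
    float pitch = 0.0f;
};

// Maps a raw axis value to [-1, 1], returning 0 inside the deadzone.
float normalizeAxis(std::int16_t raw) {
    if (std::abs(static_cast<int>(raw)) < kDeadzone) return 0.0f;
    float v = static_cast<float>(raw) / kAxisMax;
    return v < -1.0f ? -1.0f : v;  // -32768 would otherwise land just below -1
}

// Called once per frame; dt is the frame time in seconds.
void updateCamera(Camera& cam, const StickState& sticks, float dt) {
    const float moveSpeed = 3.0f;  // units per second (assumed)
    const float turnSpeed = 2.0f;  // radians per second (assumed)

    // Axes 2 and 3: the stick position is a rate, so it is applied every frame.
    cam.yaw += normalizeAxis(sticks.axis[2]) * turnSpeed * dt;
    cam.pitch += normalizeAxis(sticks.axis[3]) * turnSpeed * dt;

    // Axes 0 and 1: strafe and forward motion relative to the current heading.
    // Pushing the stick forward is assumed to report negative values.
    float strafe = normalizeAxis(sticks.axis[0]);
    float forward = -normalizeAxis(sticks.axis[1]);
    cam.x += (forward * std::sin(cam.yaw) + strafe * std::cos(cam.yaw)) * moveSpeed * dt;
    cam.z += (forward * std::cos(cam.yaw) - strafe * std::sin(cam.yaw)) * moveSpeed * dt;
}
```

In an event loop, each polled axis event would simply overwrite the matching entry of `StickState::axis`, and `updateCamera` would run once per frame regardless of whether any event arrived.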

Maze generation

2015-01-25 22:24:41

I didn't know that maze generation was actually a subject in its own right.

There's even a Wikipedia page about it: http://en.wikipedia.org/wiki/Maze_generation_algorithm

So here is my depth-first search implementation. It's pretty heavy on recursion, so it can only produce mazes of limited size before the dreaded stack overflow.

I had forgotten that functions whose recursive call is their last operation, i.e. whose result does not depend on any work done after the recursion returns, can be optimised into iterations by the compiler. This is called tail recursion. Note that C++ compilers usually apply this only when optimisations are enabled, and it is never guaranteed.

    #include <cstdio>
    #include <cstdlib>

    const unsigned int step = 20;   // the maze is step x step cells

    unsigned int * g_node_stack;    // cells we can backtrack to
    unsigned int g_node_position;   // number of entries on the stack
    bool * g_maze_grid;             // true = dug (corridor), false = wall

    // Print the grid: 1 for a dug cell, 0 for a wall.
    void displayMaze() {
        for (unsigned int i = 0; i < step; i++) {
            for (unsigned int j = 0; j < step; j++) {
                printf(g_maze_grid[j + i * step] ? "1" : "0");
            }
            printf("\n");
        }
    }

    // Count the neighbours of a cell (up, right, down, left) that are still walls.
    unsigned int checkMaze(unsigned int position, unsigned int linesize) {
        unsigned int count = 0;
        unsigned int x = position % linesize;
        unsigned int y = position / linesize;
        if (y > 0 && !g_maze_grid[position - linesize]) count++;
        if (x < linesize - 1 && !g_maze_grid[position + 1]) count++;
        if (y < linesize - 1 && !g_maze_grid[position + linesize]) count++;
        if (x > 0 && !g_maze_grid[position - 1]) count++;
        return count;
    }

    // A cell can be dug if it is still a wall and at least 3 of its own
    // neighbours are walls too, so digging it does not open a second passage.
    bool canDig(unsigned int position, unsigned int linesize) {
        return !g_maze_grid[position] && checkMaze(position, linesize) >= 3;
    }

    // Pick a random diggable neighbour of position, or return 0 if there is none.
    // 0 is a safe "no room" marker: cell 0 is a corner with only 2 neighbours,
    // so it can never pass the ">= 3" test.
    unsigned int hasRoomToDig(unsigned int position, unsigned int linesize) {
        unsigned int x = position % linesize;
        unsigned int y = position / linesize;
        unsigned int candidates[4];
        unsigned int count = 0;
        if (y > 0 && canDig(position - linesize, linesize))
            candidates[count++] = position - linesize;
        if (y < linesize - 1 && canDig(position + linesize, linesize))
            candidates[count++] = position + linesize;
        if (x < linesize - 1 && canDig(position + 1, linesize))
            candidates[count++] = position + 1;
        if (x > 0 && canDig(position - 1, linesize))
            candidates[count++] = position - 1;
        return count > 0 ? candidates[rand() % count] : 0;
    }

    // Dig the current cell, then either move on to a random neighbour or
    // backtrack to the last cell pushed on the stack. Both recursive calls are
    // the last statement of their branch (tail calls).
    void digRoom(unsigned int position, unsigned int linesize) {
        g_maze_grid[position] = true;
        unsigned int newpos = hasRoomToDig(position, linesize);
        if (newpos != 0) {
            g_node_stack[g_node_position] = newpos;
            g_node_position++;
            digRoom(newpos, linesize);
        } else if (g_node_position > 0) {
            g_node_position--;
            digRoom(g_node_stack[g_node_position], linesize);
        }
    }

    int main(int argc, char **argv) {
        g_node_stack = new unsigned int[step * step]();  // value-initialised to 0
        g_node_position = 0;
        g_maze_grid = new bool[step * step]();           // every cell starts as a wall
        digRoom(50, step);
        displayMaze();
        delete[] g_node_stack;
        delete[] g_maze_grid;
        return 0;
    }
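For comparison, here is a minimal sketch of a depth-first maze generator that uses a loop and an explicit stack, so its depth is limited by heap memory rather than by the call stack. It is not a rewrite of the code above: it swaps in the common "cells on odd coordinates, knock down the wall in between" variant of the recursive backtracker, and `generateMaze`, its parameters and the grid layout are assumptions made for the example.

```cpp
#include <cstddef>
#include <cstdlib>
#include <utility>
#include <vector>

// Builds a size x size grid (size is assumed to be odd and at least 3).
// true = corridor, false = wall. Cells live on odd coordinates; the even
// coordinates between them are walls that may be knocked down.
std::vector<bool> generateMaze(int size, unsigned int seed) {
    std::vector<bool> grid(static_cast<std::size_t>(size) * size, false);
    std::vector<std::pair<int, int>> stack;  // explicit stack replaces recursion
    const int dx[4] = {0, 0, 2, -2};
    const int dy[4] = {-2, 2, 0, 0};

    std::srand(seed);
    grid[1 * size + 1] = true;
    stack.push_back(std::make_pair(1, 1));

    while (!stack.empty()) {
        int x = stack.back().first;
        int y = stack.back().second;

        // Collect the directions leading to an undug cell inside the border.
        int candidates[4];
        int count = 0;
        for (int d = 0; d < 4; ++d) {
            int nx = x + dx[d];
            int ny = y + dy[d];
            if (nx > 0 && nx < size - 1 && ny > 0 && ny < size - 1 && !grid[ny * size + nx])
                candidates[count++] = d;
        }

        if (count == 0) {      // dead end: backtrack to the previous cell
            stack.pop_back();
            continue;
        }

        int d = candidates[std::rand() % count];
        grid[(y + dy[d] / 2) * size + (x + dx[d] / 2)] = true;  // wall in between
        grid[(y + dy[d]) * size + (x + dx[d])] = true;          // the new cell
        stack.push_back(std::make_pair(x + dx[d], y + dy[d]));
    }
    return grid;
}
```

Because every cell is reached exactly once, a 21x21 grid always ends up with 100 cells and 99 opened walls, whatever the seed; only the layout changes.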

Oculus Rift : head tracking

2015-01-18 20:36:20

Implementing head tracking using the Oculus Rift SDK is easy, except that parts of the current documentation (i.e. Oculus Developer Guide 0.4.4) seem outdated or inaccurate on some points.

First, the code that activates head tracking:

    // Start the sensor which provides the Rift’s pose and motion.
    ovrHmd_ConfigureTracking(hmd, ovrTrackingCap_Orientation |
                                  ovrTrackingCap_MagYawCorrection |
                                  ovrTrackingCap_Position, 0);

Then the code that polls head-tracking data during the rendering loop. The data is expressed as a quaternion and has to be converted to Euler angles (pitch, yaw and roll):

    // Query the HMD for the current tracking state.
    ovrTrackingState ts = ovrHmd_GetTrackingState(hmd, ovr_GetTimeInSeconds());
    if (ts.StatusFlags & (ovrStatus_OrientationTracked | ovrStatus_PositionTracked))
    {
        ovrPoseStatef pose = ts.HeadPose;
        // glm::quat takes w first, then x, y, z.
        glm::quat quat = glm::quat(pose.ThePose.Orientation.w, pose.ThePose.Orientation.x,
                                   pose.ThePose.Orientation.y, pose.ThePose.Orientation.z);
        // eulerAngles returns (pitch, yaw, roll) in a vec3.
        glm::vec3 pose3f = glm::eulerAngles(quat);
        this->camera->MoveOvr(-pose3f[0], pose3f[1], pose3f[2]);
    }
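To show what the quaternion-to-Euler step involves, here is a standalone sketch of one common conversion, with pitch, yaw and roll taken as rotations about the X, Y and Z axes. It is written without GLM, so `Quat`, `Euler` and `toEuler` are names invented for the example, and the axis conventions are an assumption that may not match every library.

```cpp
#include <algorithm>
#include <cmath>

struct Quat { float w, x, y, z; };          // assumed to be a unit quaternion
struct Euler { float pitch, yaw, roll; };   // radians, about X, Y and Z

Euler toEuler(const Quat& q) {
    Euler e;
    // Rotation about X.
    e.pitch = std::atan2(2.0f * (q.y * q.z + q.w * q.x),
                         q.w * q.w - q.x * q.x - q.y * q.y + q.z * q.z);
    // Rotation about Y; the clamp protects asin against rounding errors.
    float s = 2.0f * (q.w * q.y - q.x * q.z);
    e.yaw = std::asin(std::max(-1.0f, std::min(1.0f, s)));
    // Rotation about Z.
    e.roll = std::atan2(2.0f * (q.x * q.y + q.w * q.z),
                        q.w * q.w + q.x * q.x - q.y * q.y - q.z * q.z);
    return e;
}
```

For example, a quaternion describing a 0.5 radian turn about the X axis, `{cos(0.25), sin(0.25), 0, 0}`, comes back as a pitch of 0.5 with yaw and roll at zero.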

Rift SDK : stereo vision

2015-01-14 01:15:46

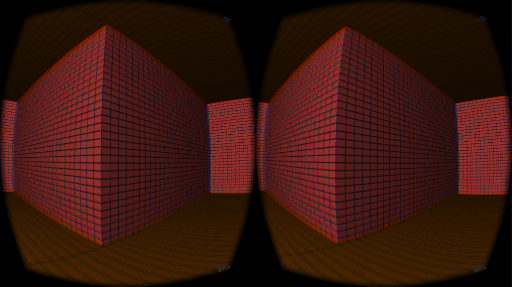

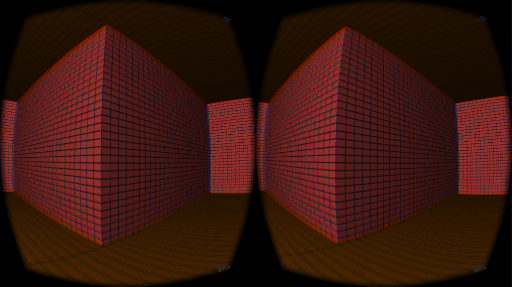

I have managed to implement stereo vision. It was very easy once I knew that on the Oculus Rift both cameras have to be parallel, hence there is no notion of convergence as there is in stereo vision for 3D TVs.

So with just an offset to the left and to the right, I can admire my own procedural world in all its volumetric glory!

Developing with the Oculus Rift DK2

2015-01-11 23:00:55

I have owned an Oculus Rift DK2 for about 6 months and I hadn't tried to develop for it until recently. I got my early OpenGL project up and running quite easily, at least for the rendering part.

There are two ways of getting graphics output on the DK2:

* DIY method: create your own barrel distortion shader and use it to render your scene.

* Plug and play method: render your scene to a framebuffer, call the DK2 API at the end of the draw process and let the API apply the lens distortion.

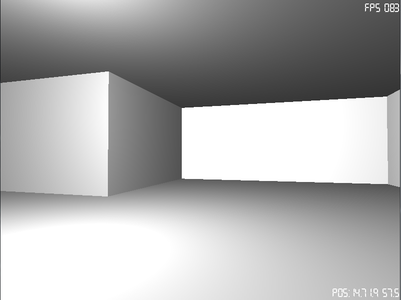

I started with the easy way and managed to have something showing up quickly. One can see that it's obviously still wrong, as the screen is split between the two eyes instead of each eye showing the whole scene, but that's not bad for a start.

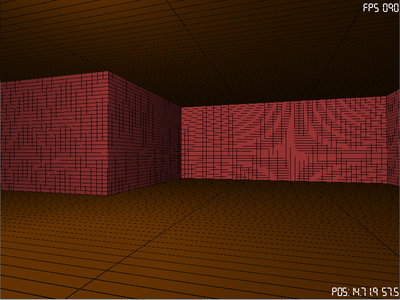

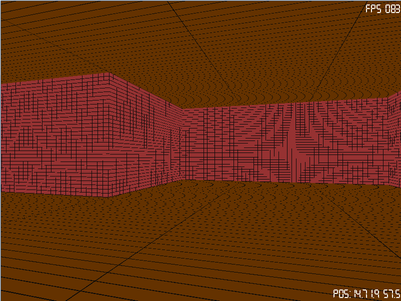

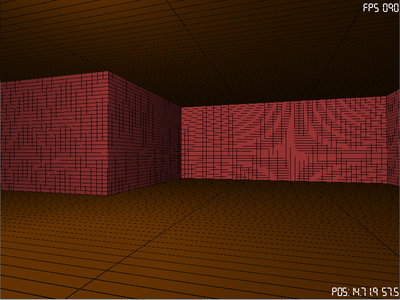

Rendering the year 2000 way

2015-01-07 01:42:04

While writing a first-person 3D game, I am currently revisiting some old-fashioned rendering techniques: Quake-style rendering.

One of the many innovations featured in the Quake engine was the use of lightmap texturing in addition to the standard diffuse texture (i.e. the plain flat texture).

A lightmap is a texture containing the lighting data for a 3D model in its environment. Because of the poor performance of the hardware of those days, it was impossible to compute accurate lighting for the whole environment in real time, so lighting had to be pre-baked and stored in a texture.

I remember that back then the leading graphics hardware was the Voodoo 2 card from 3dfx, which boasted amazing performance by being the very first card to feature "single-pass dual texturing", especially for the Quake game.

This feature was used to combine the diffuse texture and the lightmap directly in one draw call.

In the current engine I am writing, diffuse textures are procedurally generated.

I am computing the lightmap texture, currently for only one light source.

I am generating a 256x256 lightmap texture for each wall. Each pixel of the lightmap is computed individually by evaluating the amount of light received by the physical element of the wall at this position (using Lambertian reflectance).

The final rendering is obtained by multiplying the color components of the diffuse texture with those of the lightmap.
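That multiplication can be sketched per texel as below. In practice it is done by the GPU when both textures are bound; the `Rgb` type and the two functions are hypothetical helpers that only show the arithmetic on 8-bit channels.

```cpp
#include <cstdint>

struct Rgb { std::uint8_t r, g, b; };

// Multiplies two 8-bit channels as if they were fractions in [0, 1],
// rounding to the nearest integer.
std::uint8_t modulateChannel(std::uint8_t diffuse, std::uint8_t light) {
    return static_cast<std::uint8_t>((diffuse * light + 127) / 255);
}

// Final color = diffuse color scaled by the lightmap, channel by channel.
Rgb modulate(Rgb diffuse, Rgb light) {
    return Rgb{modulateChannel(diffuse.r, light.r),
               modulateChannel(diffuse.g, light.g),
               modulateChannel(diffuse.b, light.b)};
}
```

A fully lit texel (255) leaves the diffuse color unchanged, a lightmap value of 128 roughly halves it, and 0 turns it black.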

Nowadays, it is easy to compute real-time per-pixel lighting using a shader program (a piece of code executed by the rendering pipeline). It's still an expensive calculation that is often reserved for objects close to the camera, whereas far-off objects are rendered using simpler algorithms and light is still pre-baked, especially in scenes where lighting conditions do not change over time.

This technique is also used in the mobile world, where graphics processing performance does not allow for complex real-time calculations at the fragment (i.e. pixel) level.

http://en.wikipedia.org/wiki/Lambertian_reflectance

http://en.wikipedia.org/wiki/Quake_(video_game)

http://en.wikipedia.org/wiki/Quake_engine

Here is the lightmap generation code for one tile.

    Texture * TextureGenerator::generateLightmapTexture(unsigned int width,
            unsigned int height, T_TextureLightSource * source, T_TextureQuad * quad) {
        // Minimum brightness, so that unlit texels are not pure black.
        const float ambient = 30.0f;

        glm::vec3 p1 = glm::vec3(quad->p1[0], quad->p1[1], quad->p1[2]);
        glm::vec3 p2 = glm::vec3(quad->p2[0], quad->p2[1], quad->p2[2]);
        glm::vec3 p4 = glm::vec3(quad->p4[0], quad->p4[1], quad->p4[2]);

        // The quad's normal is constant, so normalize it once outside the loops.
        glm::vec3 normal = glm::normalize(glm::cross(p2 - p1, p4 - p1));
        glm::vec3 light_position = glm::vec3(source->position[0],
                source->position[1], source->position[2]);

        unsigned char * texture_data = (unsigned char*)malloc(
                sizeof(unsigned char) * width * height * 4);

        // World-space step between two neighbouring texels along each edge.
        glm::vec3 x_increment = (p2 - p1) / (float)width;
        glm::vec3 y_increment = (p4 - p1) / (float)height;

        for (unsigned int i = 0; i < height; i++) {
            for (unsigned int j = 0; j < width; j++) {
                glm::vec3 current = p1 + (float)i * y_increment + (float)j * x_increment;
                glm::vec3 lightdir = glm::normalize(light_position - current);

                // Lambertian term: cosine of the angle between the normal and
                // the light direction, or 0 when the light is behind the wall.
                float dot = glm::max(glm::dot(normal, lightdir), 0.0f);

                unsigned char * texel = &texture_data[(i * width + j) * 4];
                for (int c = 0; c < 3; c++) {
                    texel[c] = (unsigned char)glm::min(
                            dot * source->color[c] * 255.0f + ambient, 255.0f);
                }
                texel[3] = 255;  // opaque alpha
            }
        }

        return new Texture(width, height, texture_data);
    }

By the way, this is one of the few times where C++ operator overloading really shines, being able to write this:

    glm::vec3 normal = glm::cross(p2-p1,p4-p1);

Java jvm wide properties

2014-11-25 15:19:23

I can't believe this kind of code is recommended on some websites:

    System.setProperty("javax.xml.parsers.DocumentBuilderFactory",
            "org.apache.xerces.jaxp.DocumentBuilderFactoryImpl");
    final DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();

There are so many ways this code can break:

* System properties are JVM-wide, so it will modify the behavior of other applications running on the same JVM.

* Not thread-safe: anything can happen between setProperty and newInstance when applications run concurrently.